NVIDIA RTX A2000 Makes RTX Technology Available to More Professionals

With its compact, power-efficient design, the new NVIDIA® RTX™ A2000 GPU fits more desktops while accelerating AI and ray tracing for design workflows.

The powerful real-time ray tracing and AI acceleration capabilities of NVIDIA’s RTX technology have transformed design and visualization workflows for the most complex tasks, like designing airplanes and automobiles, creating visual effects in movies and carrying out large-scale architectural design.

The new NVIDIA RTX A2000 — NVIDIA’s most compact, power-efficient GPU for a wide range of standard and small-form-factor workstations — makes it easier to access RTX from anywhere.

NVIDIA RTX A2000 is designed for everyday workflows, so professionals can develop photorealistic renderings, build physically accurate simulations and use AI-accelerated tools. With it, artists can create beautiful 3D worlds, architects can design and virtually explore the next generation of smart buildings and homes, and engineers can create energy-efficient and autonomous vehicles that will drive us into the future.

The NVIDIA RTX A2000 GPU has 6GB of memory capacity with Error Correction Code (ECC) to maintain data integrity for uncompromised computing accuracy and reliability, which especially benefits the engineering, healthcare, financial services, and scientific and technical disciplines.

With remote work part of the new normal, simultaneous collaboration with colleagues on projects across the globe is critical. NVIDIA RTX technology powers Omniverse, NVIDIA’s collaboration and simulation platform that enables teams to iterate together on a single 3D design in real time while working across different software applications. The NVIDIA RTX A2000 will serve as a portal into this world for millions of designers.

Take a deep dive into the segment-redefining NVIDIA RTX A2000. Availability from PNY’s extensive system builder community and reseller ecosystem is anticipated to start in October.

NVIDIA Brings Millions More into the Metaverse with Expanded Omniverse Platform

Introduction of Blender, Upcoming Adobe 3D App Integration Enables Creators Everywhere

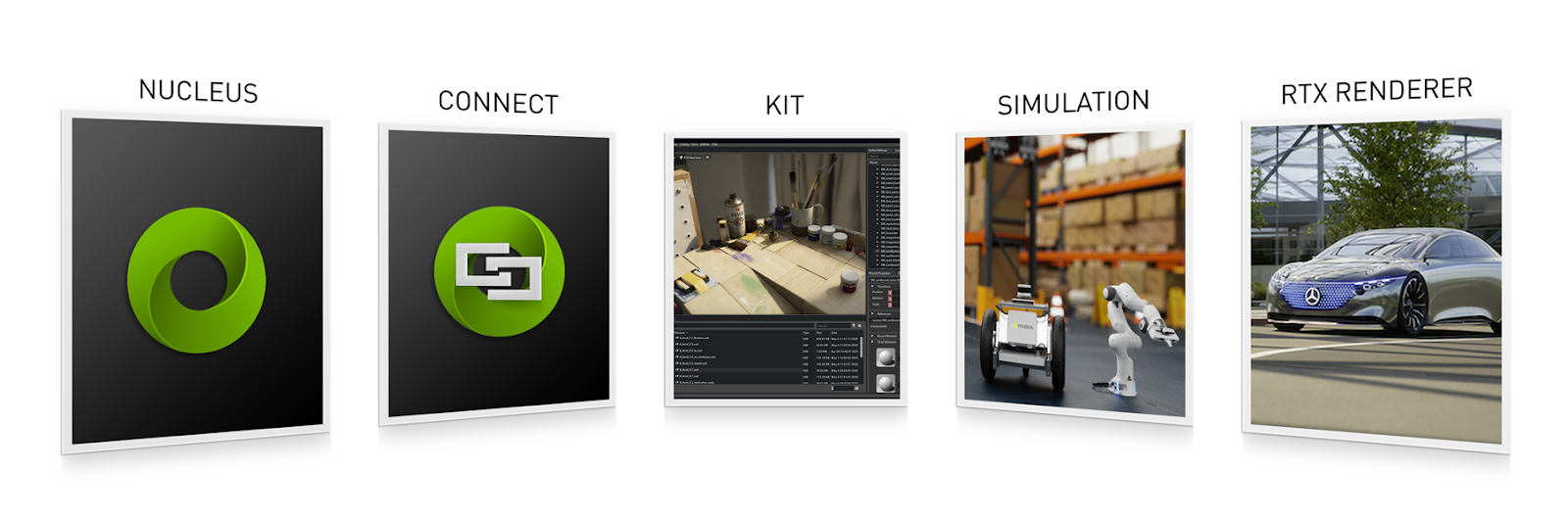

NVIDIA announced a major expansion of NVIDIA Omniverse—the world’s first simulation and collaboration platform that is delivering the foundation of the metaverse—through new integrations with Blender and Adobe that will open it to millions more users.

Blender, the world’s leading open-source 3D animation tool, will now have Universal Scene Description (USD) support, enabling artists to access Omniverse production pipelines. Adobe is collaborating with NVIDIA on a Substance 3D plugin that will bring Substance Material support to Omniverse, unlocking new material editing capabilities for Omniverse and Substance 3D users.

NVIDIA Omniverse makes it possible for designers, artists and reviewers to work together in real time across leading software applications in a shared virtual world from anywhere. Professionals at over 500 companies are evaluating the platform. Since the launch of its Open Beta in December, Omniverse has been downloaded by over 50,000 individual creators.

NVIDIA Omniverse connects worlds, helping enable the vision of the metaverse to become a reality. With input from developers, partners and customers, NVIDIA is advancing this revolutionary platform so that everyone from individuals to large enterprises can work with others to build amazing virtual worlds that look, feel and behave just as the physical world does.

The metaverse is an immersive and connected shared virtual world and will be built collaboratively across industries. In it, artists can create one-of-a-kind digital scenes, architects can create beautiful buildings and engineers can design new products for homes. These creations can then be taken into the physical world after they have been perfected in the digital world.

OMNIVERSE ECOSYSTEM EXPANDS

NVIDIA Omniverse has a swiftly growing ecosystem of supporting partners.

Key to Omniverse’s industry adoption is Pixar’s open-source USD, the foundation of Omniverse’s collaboration and simulation platform, which enables large teams to work simultaneously across multiple software applications on a shared 3D scene. This open standard framework gives software partners the ability to connect to Omniverse, whether through USD adoption and support or by building an Omniverse Connector, a plugin to the platform.

Apple, Pixar and NVIDIA are all committing to advancing USD and have collaborated to bring advanced physics capabilities to the framework, embracing open standards to provide 3D workflows to billions of devices. Blender and NVIDIA have also collaborated to provide USD support to the upcoming release of Blender 3.0 and the millions of artists who use the software.

NVIDIA is further contributing USD and materials support in the Blender 3.0 alpha USD branch, which is available now for creators.

NVIDIA and Adobe are collaborating on a Substance 3D plugin that will unlock new material editing abilities for Omniverse and Substance 3D users. Artists and creators will be able to work directly with Substance Materials either sourced from the Substance 3D Asset platform or created in Substance 3D applications, creating a more seamless 3D workflow.

The NVIDIA Omniverse ecosystem continues to grow, connecting industry-leading applications from software companies such as Adobe, Autodesk, Bentley Systems, Blender, Clo Virtual Fashion, Epic Games, Esri, Golaem, Graphisoft, Lightmap, Maxon, McNeel & Associates, PTC, Reallusion, Trimble, and wrnch Inc.

AVAILABILITY AND PRICING

NVIDIA Omniverse Enterprise is currently in early access. The platform will be available later this year on a subscription basis from NVIDIA and PNY’s partner network (among others). Information on pricing for Omniverse Enterprise is now available.

NVIDIA RESEARCH TAKES BEST IN SHOW FOR REAL-TIME LIVE! WITH DIGITAL AVATARS AT SIGGRAPH

In a mind-bending, mind-blowing demo, NVIDIA researchers stuffed four AI models into a serving of digital avatar technology for SIGGRAPH 21’s Real-Time Live, winning the Best in Show award.

The showcase, one of the most anticipated events at the world’s largest computer graphics conference, held virtually this year, celebrates cutting-edge real-time projects spanning game technology, augmented reality and scientific visualization. It featured a lineup of jury-reviewed interactive projects, with presenters hailing from Unity Technologies, Rensselaer Polytechnic Institute, the NYU Future Reality Lab and more.

Broadcasting live from NVIDIA’s Silicon Valley headquarters, the NVIDIA Research team presented a collection of AI models that can create lifelike virtual characters for projects such as bandwidth-efficient video conferencing and storytelling.

The demo featured tools to generate digital avatars from a single photo, animate avatars with natural 3D facial motion and convert text to speech.

Making digital avatars is a notoriously difficult, tedious and expensive process, but with AI tools there is an easy way to create digital avatars for real people as well as cartoon characters. These avatars can be used for video conferencing, storytelling, virtual assistants and many other applications.

In the demo, two NVIDIA research scientists played the part of an interviewer and a prospective hire speaking over video conference. Over the course of the call, the interviewee showed off the capabilities of AI-driven digital avatar technology to communicate with the interviewer.

The researcher playing the part of interviewee relied on an NVIDIA RTX Laptop throughout, while the other used a desktop workstation powered by NVIDIA RTX A6000 GPUs. The entire pipeline can also run on GPUs in the cloud.

While sitting in a campus coffee shop, wearing a baseball cap and a face mask, the interviewee used the Vid2Vid Cameo model to appear clean-shaven in a collared shirt on the video call. The AI model creates realistic digital avatars from a single photo of the subject—no 3D scan or specialized training images are required.

The digital avatar creation is instantaneous, allowing researchers to quickly create a different avatar by using a different photo, a capability that was demonstrated by using two additional images over the course of the demo.

Instead of transmitting a video stream, the researcher’s system sent only his voice—which was then fed into the NVIDIA Omniverse Audio2Face app. Audio2Face generates natural motion of the head, eyes and lips to match audio input in real time on a 3D head model. This facial animation went into Vid2Vid Cameo to synthesize natural-looking motion with the presenter’s digital avatar.

The models, optimized to run on NVIDIA RTX GPUs, easily deliver video at 30 frames per second. The approach is also highly bandwidth efficient, since the presenter is sending only audio data over the network instead of transmitting a high-resolution video feed.
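To give a rough sense of the bandwidth point above, the short Python sketch below compares the data sent by an audio-only stream with that of a conventional video stream. The two bitrates and the 30-minute call length are illustrative assumptions only; they are not figures from the NVIDIA demo, and the function names are hypothetical.

```python
# Back-of-the-envelope comparison of audio-only vs. video streaming.
# All numbers below are assumptions for illustration, not demo measurements.
AUDIO_KBPS = 32      # assumed bitrate of a compressed speech stream
VIDEO_KBPS = 2500    # assumed bitrate of a compressed HD video-call stream


def megabytes_sent(kbps: float, seconds: float) -> float:
    """Convert a constant bitrate in kilobits per second to megabytes sent."""
    bits = kbps * 1000 * seconds
    return bits / 8 / 1_000_000


def bandwidth_saving(audio_kbps: float, video_kbps: float) -> float:
    """Fraction of bandwidth saved by sending audio instead of video."""
    return 1 - audio_kbps / video_kbps


call_seconds = 30 * 60  # a hypothetical 30-minute call
audio_mb = megabytes_sent(AUDIO_KBPS, call_seconds)
video_mb = megabytes_sent(VIDEO_KBPS, call_seconds)
saving = bandwidth_saving(AUDIO_KBPS, VIDEO_KBPS)

print(f"audio only: {audio_mb:.1f} MB")
print(f"video:      {video_mb:.1f} MB")
print(f"saved:      {saving:.1%}")
```

Under these assumed bitrates the audio-only stream needs only a small fraction of the data a video feed would; actual savings depend on the codecs and resolutions used.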

Taking it a step further, the researcher showed that when his surroundings got too loud, the RAD-TTS model could convert typed messages into his voice, replacing the audio fed into Audio2Face. This breakthrough text-to-speech, deep learning-based tool can synthesize lifelike speech from arbitrary text inputs in milliseconds.

SIGGRAPH runs until August 13. You can view a video of NVIDIA’s SIGGRAPH highlights on YouTube, and also watch the NVIDIA premiere documentary “Connecting in the Metaverse: The Making of the GTC Keynote”.

NVIDIA clearly extended its leadership in visual computing, AI-enhanced applications and remote collaboration (Omniverse) at SIGGRAPH 21.