Is data transfer in your enterprise network impacting the performance of your AI applications? If so, it might be time to consider upgrading to NVIDIA Magnum IO™. As companies refine their data and become intelligence manufacturers, data centers are becoming AI factories enabled by accelerated computing, which has sped up computing by a million-x. However, accelerated computing requires accelerated IO.

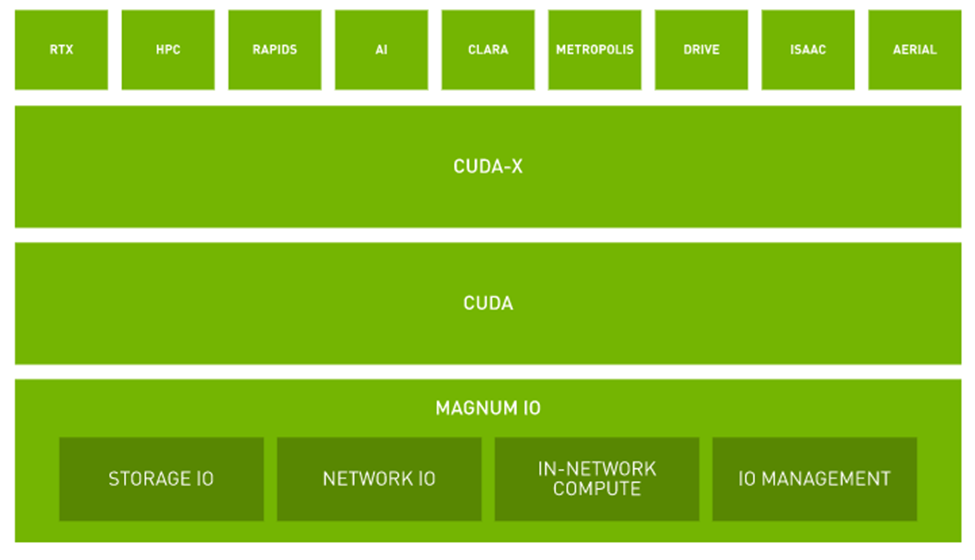

NVIDIA Magnum IO is the architecture for parallel, intelligent data center IO. It maximizes storage, network, and communications performance for multi-node, multi-GPU applications, which encompass large language models, recommender systems, imaging, simulation, and scientific research.

NVIDIA Magnum IO consists of IO acceleration technologies and software libraries designed specifically for HPC networking. It combines NVIDIA's high-speed networking technology with advanced software features that can improve the efficiency and effectiveness of AI, simulation, and HPC for businesses.

General-purpose enterprise Ethernet networks are fine for supporting traditional business applications like customer relationship management (CRM) systems, enterprise resource planning (ERP) systems, human resource management (HRM) systems, or e-commerce systems. In those environments, lots of traffic goes to lots of places, but how fast it is handled is less critical as long as the work completes within seconds, minutes, or hours.

But when enterprises want to run inference models on text, speech, or video, and need to mine mountains of customer data to train models of consumer behavior, they turn to accelerated GPU computing and accelerated GPU IO to improve performance and reduce latency.

Improve Data Transfer Speed with Magnum IO

One of the key benefits of NVIDIA Magnum IO is its ability to improve data transfer speed for AI applications across a network. It is a full-stack solution that includes specialized network hardware, such as network adapters and switches, to perform in-network computing, which increases data throughput by 2X for IO-intensive GPU calculations.

With InfiniBand networking technology, NVIDIA Magnum IO can transfer data at much faster rates than traditional networking solutions. GPUs can talk directly to other GPUs and to stored data, bypassing the middleman, the CPU, which is much slower at the serious math used in AI. This is especially useful in environments where large amounts of data must be transferred quickly, such as big data analytics.
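To make the idea of bypassing the CPU a little more concrete, here is a minimal back-of-the-envelope sketch in Python. It is not a benchmark and does not call any Magnum IO API. It simply compares a staged path, where data is copied into CPU memory and then copied again to the GPU, with a direct path that needs a single copy. The function names and every size and bandwidth figure are hypothetical placeholders chosen for illustration, not measured values.

```python
def staged_transfer_seconds(size_gb, source_to_host_gb_per_s, host_to_gpu_gb_per_s):
    """Estimate transfer time when data is staged through CPU memory.

    Simplifying assumption: the two copies run one after the other,
    so their times add up. Real systems may overlap them partially.
    """
    return size_gb / source_to_host_gb_per_s + size_gb / host_to_gpu_gb_per_s


def direct_transfer_seconds(size_gb, direct_gb_per_s):
    """Estimate transfer time when data moves to the GPU in a single copy."""
    return size_gb / direct_gb_per_s


# Hypothetical placeholder values, NOT measurements of any real system.
SIZE_GB = 100.0
SOURCE_TO_HOST_GB_PER_S = 10.0
HOST_TO_GPU_GB_PER_S = 20.0
DIRECT_GB_PER_S = 10.0

staged = staged_transfer_seconds(SIZE_GB, SOURCE_TO_HOST_GB_PER_S, HOST_TO_GPU_GB_PER_S)
direct = direct_transfer_seconds(SIZE_GB, DIRECT_GB_PER_S)

print(f"staged through CPU memory: {staged:.1f} s")
print(f"direct to GPU:             {direct:.1f} s")
print(f"ratio (staged / direct):   {staged / direct:.2f}")
```

Under these assumed numbers, the saving comes entirely from removing the extra copy. The actual gain in a real deployment depends on the hardware, the workload, and how much of the copying can be overlapped.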

Better Data Analytics

Enterprises need to effectively analyze and visualize data for insights. A vital part of this process, broadly referred to as data analytics, requires performing ETL (Extract, Transform, and Load) operations to clean and prepare data from different sources to train AI-powered applications.
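For readers unfamiliar with ETL, the three steps can be sketched at toy scale using only the Python standard library. This is an illustrative, CPU-only example under stated assumptions: the sample records, the column names, the `purchases` table, and the function names are all hypothetical, and nothing here uses Magnum IO, Spark, or RAPIDS. Production pipelines apply the same pattern to far larger datasets.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with typical problems: stray whitespace,
# inconsistent capitalization, and a missing value.
RAW_CSV = """customer_id,region,amount
1, east ,19.99
2,WEST,
3,East,5.00
"""


def extract(text):
    """Extract: parse the raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount, normalize regions, convert types."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["customer_id"]), row["region"].strip().lower(), float(amount))
        )
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into a database table."""
    conn.execute(
        "CREATE TABLE purchases (customer_id INTEGER, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO purchases VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

# The cleaned data is now ready for analysis, for example totals per region.
total_by_region = conn.execute(
    "SELECT region, SUM(amount) FROM purchases GROUP BY region"
).fetchall()
print(total_by_region)
```

Each stage reads or writes every record, which is why ETL becomes IO-bound as datasets grow and are spread across many servers.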

Data analytics often consumes the majority of the time in AI application development, because scale-out solutions built on commodity CPU-only servers get bogged down by a lack of scalable computing performance when large datasets are scattered across multiple servers.

For example, by combining InfiniBand networking, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform is uniquely able to accelerate these huge workloads at unprecedented levels of performance and efficiency at rack and data center scales.

More Benefits for Enterprise AI Users

In addition to improving data access speed and AI application performance, NVIDIA Magnum IO also offers a range of other benefits for enterprise AI users.

- It can help reduce the complexity of network management by providing a single platform for managing and measuring the IO performance of multiple networking components. This can save businesses time and resources that would otherwise be spent tuning and troubleshooting multiple systems.

- It can scale with the needs of enterprise AI. As a business grows and the volume of data being transferred increases, NVIDIA Magnum IO can scale up and scale out to meet these demands. This helps ensure that an enterprise's accelerated computing and networking infrastructure can always handle the demands placed on it.

Overall, NVIDIA Magnum IO is a powerful solution for improving the performance and efficiency of enterprise AI, thanks to its:

- High-speed networking technology

- Advanced storage IO

- IO management features

- Ability to scale with the needs of an enterprise

The full-stack hardware and software accelerated computing data center platform can run enterprise AI workloads in a fraction of the space, for a fraction of the power, and at a fraction of the cost of traditional CPU-only servers on traditional networks. Your mileage may vary, but if you're tired of slow data transfer dragging down your enterprise AI, it might be time to give NVIDIA Magnum IO and accelerated computing a try.

About PNY Technologies

PNY Technologies offers Magnum IO and NVIDIA networking solutions for high-performance computing and artificial intelligence workloads. These solutions provide fast data processing and low-latency, high-bandwidth connections for efficient network performance. For more information visit www.pny.com/networking-hpc or contact GOPNY@PNY.COM.