In this blog post, I will go over the hardware considerations I used when putting together a system for benchmarking GPU performance for Deep Learning using Ubuntu 18.04, NVIDIA GPU Cloud (NGC) and TensorFlow. Keep in mind that everyone will have different budgets and requirements for their own systems, which can and will result in a wide range of configurations. My particular list should serve only as a reference; your system will likely be differ ent based on your own requirements.

ent based on your own requirements.

Before we dive into the details, let’s go over what we are seeking to accomplish. Our goal is to build a system to test the compute performance between different GPUs; therefore the GPU should be the only variable that changes between the different test runs. To ensure the consistency of our tests, we will remove any potential bottlenecks that will negatively impact GPU performance.

Here are areas that have the potential to slow down the GPU, preventing it from running at full speed:

- Processor

- Memory

- PCI-Express Lanes

- Storage

- Power

- Operating Environment

Processor

The Processor is your overall data traffic controller, so you want to ensure that you have a processor that is equipped with sufficient processing power to keep data flowing and enough PCI-Express lanes to support your GPUs. I suggest a minimum of four cores and support for 20 PCI-Express lanes, which is not a hard requirement to meet these days with processors from both Intel and AMD.

Memory

My rule of thumb for system memory is that you should have at least as much system memory as your GPU's frame buffer, but ideally, you will want system memory to be double the amount of your GPU's frame buffer. This way, your system will store enough data in the memory to feed your GPUs without having to read directly from your data storage devices.

PCI-Express Lanes

The goal is to provide your GPUs access to full PCI-Express 3.0 x16 bandwidth.

GeForce and Quadro graphics cards will operate with x8 and even x4 PCI-Express lanes but this is not ideal for a deep learning platform especially for benchmarking. If you intend to test and operate multiple GPUs in a system, this is a particular requirement you should pay extra attention to.

Storage

M.2 NVMe drives are preferred, but SATA based SSDs will work too. Deep learning is all about moving a large amount of small data to and from GPUs, which is not an ideal workload for spinning hard drives.

Power

Make sure you have sufficient power to support all your components; this deserves extra attention if you intend to test multi-GPU configurations with high-TDP CPUs.

Operating Environment

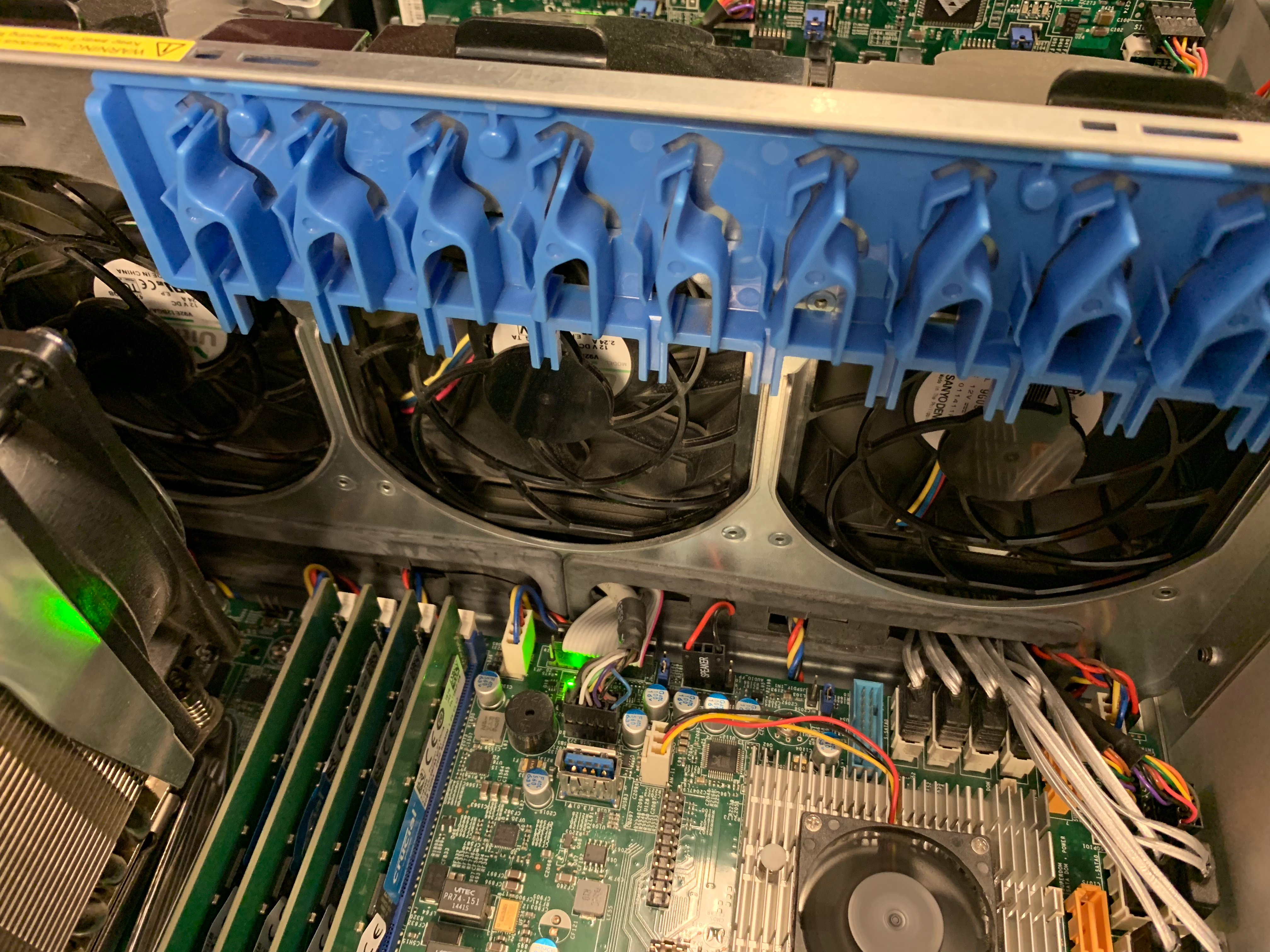

Modern GPUs and CPUs are very sensitive to temperature; these critical components will provide an extra boost of performance when operating at ideal temperatures but throttle down to protect themselves if the temperature is high. A good test environment should have ample of air flow across the components to maintain a consistent operating environment.

To finish up this blog post, let me go over my own configuration:

My Configuration

Processors: Dual Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz

Memory: 128GB DDR4 Registered ECC memory

Storage: Dual PNY SATA 480GB SSDs

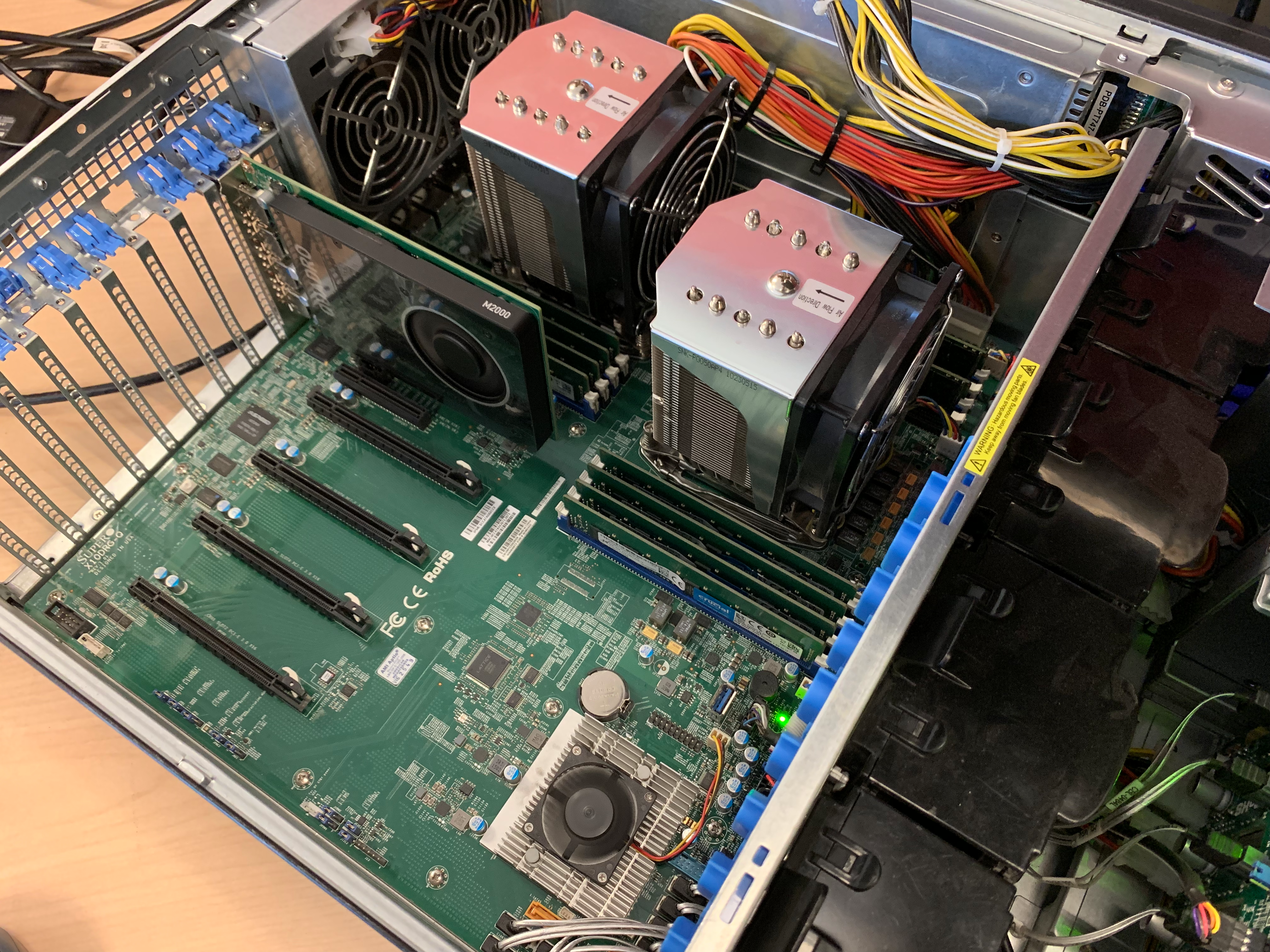

To house my components, I am using a re-purposed SuperMicro 747TQ Workstation, which is a trusted and reliable system platform that provides the following benefits:

PCI-Express Lanes: This system provides a total of four PCI-Express x16 slots at full bandwidth; this means I can reliably operate up to four GPUs.

Power: Total of 1620 W Redundant power

Operating Environment: Four high power 92mm fans directly situated in front of the key components to keep everything cool.

In the next blog post, I will go over how to set up an Ubuntu 18.04 LTS environment, connect it to the NVIDIA GPU Cloud, then pull the necessary TensorFlow container, and finally execute the ResNet50 image classification benchmark script.

Stay tuned!